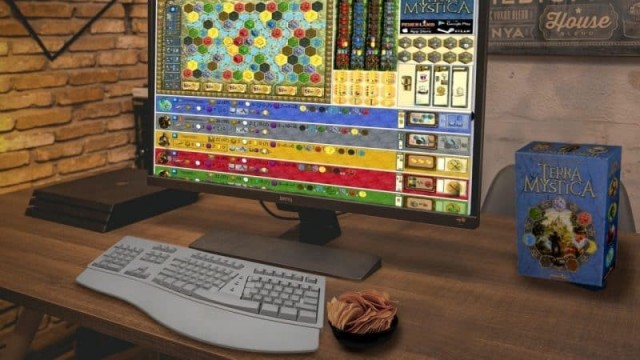

What does rating games really mean?

I have a problem with game ratings. Not the concept: as long as people choose to partition games into various levels of "goodness" or "badness" there will be a demand for scoring systems. As a player, I appreciate it; as a designer, I accept it; as a scientist, I wish we were better at it. And we can be. It's just hard. I'll grind my scientific axe first, then propose a few rating systems that I think would prove more useful than those we typically find today.

Slapping down a rating scale open to all comers without controls and absent support leaves the entire system vulnerable to a variety of ill influences. Beyond conditionality and motivational biases, which are always present, numerical rating systems typically fall prey to issues arising from value nonlinearity and inconsistent criteria reduction.

Let's start with conditionality bias. Every assessment we make is conditioned on the public and private information we have available at the time. Suppose you're asked about the best place to park downtown. You'll base your assessment on public knowledge (e.g., local lots, garages, side streets) and private information (e.g., garage X has a broken gate, lot Y fills up by 10 AM, side street Z has alternate parking). Some of your private information will include your personal preferences (e.g., to park above ground rather than underground). Unless we take the time to specify and share our private information, it is unreasonable to think that our assessment of the best place to park will be the same.

Even given common information, the rating scales themselves can present problems. Two that almost always plague numerical scales are a lack of common reference points and a presumption of value linearity. With regard to reference points, raters with different "starting points" are unlikely to express the same rating with the same number. If you and I both feel the same way about a game and use the same well-defined rubric to assign a numerical score, we stand a good chance of picking the same number. However, if I make my ratings starting at 5 and adjust away from 5 based on things I like or dislike, and you start at 10 and deduct points for things you dislike, it's highly unlikely that we will agree on what a 7 means. My 7 will be driven by accolades; yours, by indictments.

The assumption of value linearity compounds this problem. When presented with a numerical scale, people typically presume that the incremental value derived from a rating increase from X to X+1 is the same regardless of the value of X. That is, the added value expressed in going from a rating of 1 to 2 is presumed to be the same as that expressed in going from a rating of 6 to 7. This rarely holds true. On a ten-point scale, there is seldom any real difference between ratings of 1 and 2 or between ratings of 9 and 10; however, going from a rating of 7 to 8 can present a challenging hurdle.

The greatest problem facing numerical scales is the distillation of multiple assessments into a single number. Rating a game ultimately comes down to assessing the game's merit across multiple criteria. We can measure a game's table footprint using square centimeters, its physical weight in kilograms, and its play duration in hours. But how should we characterize its difficulty, complexity, or quality? How do we take into account the milling of wooden components, the number of decisions per turn, the number of alternatives per decision, the manner in which uncertainties are resolved, the durability of the cards, and so forth? Integrating several important attributes into a single score requires careful weighting and scaling which, by nature, yields wide variability across assessors.

For example, suppose you and I agree to assess a game based only on its "thematic value" and "playability," and that both of these attributes will be scored on a mutually accepted linear scale from 1 to 10. Suppose further that we agree that the game scores a 4 on theme and an 8 on playability. On a straight average, the game would score a 6. This presumes a 50/50 weighting on theme and playability. Suppose, however, that I value theme much more than playability and you value playability much more than theme. In fact, let's just say that our weights are 80/20 and 30/70, respectively. My rating would be 4.8 and yours would be 6.8. That's a huge difference. Even if we had a well-designed numerical rating system, common information, common reference points, common marginal value functions, and common weights across a set of common criteria, we'd still have problems. We would also have to share common preferences.
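The arithmetic in this example is easy to check. The following is a minimal sketch, not a proposed rating method: the function name `weighted_score` and the dictionary layout are my own illustrative choices, and the weights are written as whole percentages so the results come out exact.

```python
def weighted_score(scores, weights):
    """Weighted average of attribute scores.

    `scores` and `weights` are dicts keyed by attribute name
    (hypothetical layout, chosen for illustration only).
    Weights are relative, so they need not sum to 100.
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(scores[name] * weights[name] for name in scores) / total_weight


# The scores we both agreed on: 4 for theme, 8 for playability.
scores = {"theme": 4, "playability": 8}

straight_average = weighted_score(scores, {"theme": 50, "playability": 50})
mine = weighted_score(scores, {"theme": 80, "playability": 20})
yours = weighted_score(scores, {"theme": 30, "playability": 70})

print(straight_average, mine, yours)
```

Same game, same attribute scores, same scale; the only thing that changed between the three results is the weighting.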

Regardless of our desire for objectivity, we cannot discount the influence of our personal preferences and the private motivations they drive. If I hate war games, it is unlikely that I will give a war game as high a rating as someone else who loves them. This may or may not be the consequence of conscious choices. In some cases, my distaste may be expressed in a low score for a specific attribute (e.g., game complexity); in other cases, my dislike may be manifest in an explicit penalty simply because "I don't like these kinds of games." Unless people take time to leave detailed comments when they drop a numerical rating, we really don't know what underlies their assessment. It could be a great game that they didn't like because they are sick and tired of zombies, or a terrible game that they loved because they adore all things Cthulhu.

So, what's the solution? How are we to talk about average scores and variances and skewness and modes and medians and all sorts of cool statistical measures without a numerical scale? Simple: we don't. Without clear and appropriate context, ratings are at best worthless. Even so, the desire behind their use is valid: people want to know how a game stacks up before they commit to playing or buying it. In descending order, my preferences for satisfying this need are: written reviews, Likert-type scales, categorical scoring, and augmented stars.

For depth and completeness with solid context, it's hard to beat a well-crafted written review. I find them to be more thoughtful and articulate than audio or video reviews, especially when it's never clear to me whether the person I'm listening to or watching has actually played the game in question or is just recycling words lifted from other reviewers or the back of the box. Whether or not I agree with their assessment, a reviewer's familiarity with and mastery of a game is readily apparent in a written review, and that alone makes it more valuable to me. Unfortunately, the time, talent, and dedication required to produce high-quality written reviews preclude their timely availability in the current market environment.

In the absence of a written review, I recommend a set of Likert-type scales for differentiating games. Examples of these types of scales include pain scales where you are asked to choose the face that best describes your level of pain, or statements for which you are asked to put a check mark on a line to represent your degree of agreement between endpoints like "strongly agree" and "strongly disagree." For appraising a game, a set of scales might include attributes such as duration of play, depth of theme, richness of decision space, etc. For each attribute, the reviewer would make a mark on the scale to denote where they believe the game falls between the worst and best rating for that attribute. Under this approach, the final assessment of a game would be a set of marks across the set of evaluation attributes rather than a single number representing the reviewer's overall impression. This focuses the assessment on a game's characteristics and limits the influence of the reviewer's preference for some characteristics over others.

A categorical scoring system is easier to present and manage than a set of Likert-type scales, but its credibility and usefulness depend entirely on the underlying rubric that defines category assignment. Such a rubric must be well-written and easily understood, and it must clearly explain the criteria for inclusion in each category. Although it may be deployed on its own, a categorical rating system is well-served by supplementary text expressing the reviewer's reasons for placing a game in a specific category. It's one thing to assign a game to the Very Good category; it's quite another thing to assign it to the Very Good category with the caveat: "Although this game meets most of the criteria for Excellent, I find that I can only give it a Very Good due to its cumbersome combat resolution and overly complicated post-combat updating requirements. If these factors do not bother you, then you may find this game to be Excellent."

The simplest evaluation approach is to give a game some number of stars out of a maximum number to represent its relative merit. In and of itself, this type of assessment method is fraught with bias, obscurity, and ambiguity. However, five easy modifications can transform a simple star system into a robust rating method. First, use 5 stars. This provides a sufficient level of discrimination on either side of a 3-star middle score. Second, provide a readily accessible, clear, and well-defined rubric to differentiate between the stars. Third, make the 3-star middle score the reference point for all assessments: the reviewer may add or deduct stars as appropriate given the rubric, but their assessment will always begin at 3 stars. Fourth, use two colors of stars, say blue and red (or gray and black if color is unavailable), to represent the reviewer's preferences: if the game is of a type they typically like and play, use blue stars; if the game is of a type that they typically dislike or don't play, use red stars. Last, use the star's interior to illustrate the reviewer's dedication to their assessment: if they haven't played the game at least three times, use hollow stars; three to five times, use a light fill; more than five times, use a dark fill. Taken together, these five modifications to a star rating should enable the reader to better interpret and appreciate the rating provided. A rating of 3 solid red stars would mean a lot more to me than a rating of 4 hollow blue stars.
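To make the five modifications concrete, here is a minimal sketch of how an augmented star rating might be represented. The class name `StarRating`, its field names, and the 1-to-5 bounds are assumptions of mine for illustration; the color and fill rules follow the description above, with "dark" standing in for a solid star.

```python
from dataclasses import dataclass

REFERENCE_STARS = 3  # every assessment starts here, then moves up or down


@dataclass(frozen=True)
class StarRating:
    stars: int         # final rating after adjusting from the reference point
    likes_genre: bool  # True if the reviewer typically likes and plays this type
    plays: int         # number of times the reviewer has played the game

    def __post_init__(self):
        # Assumption: ratings run from 1 to 5 stars.
        if not 1 <= self.stars <= 5:
            raise ValueError("stars must be between 1 and 5")
        if self.plays < 0:
            raise ValueError("plays cannot be negative")

    @property
    def color(self):
        # Blue for a preferred type of game, red otherwise.
        return "blue" if self.likes_genre else "red"

    @property
    def fill(self):
        # Fewer than three plays: hollow; three to five: light; more: dark.
        if self.plays < 3:
            return "hollow"
        if self.plays <= 5:
            return "light"
        return "dark"

    def describe(self):
        noun = "star" if self.stars == 1 else "stars"
        return f"{self.stars} {self.fill} {self.color} {noun}"


skeptic = StarRating(stars=REFERENCE_STARS, likes_genre=False, plays=8)
fan = StarRating(stars=4, likes_genre=True, plays=1)
print(skeptic.describe())
print(fan.describe())
```

The point of carrying the color and fill alongside the count is that the reader sees the reviewer's preference and experience at a glance, rather than having to infer them from a bare number.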

To paraphrase Tom Lehrer, a rating system is like a sewer: what you get out of it depends on what you put into it. For some, it's all about numbers. Numbers in and numbers out. For me, I'd like something a little more meaningful.
